Storage(SW)

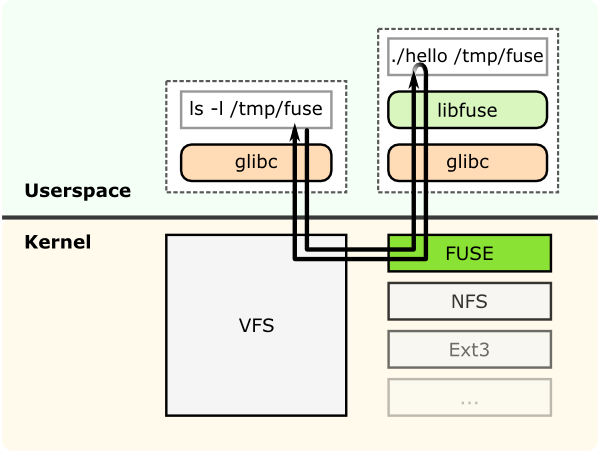

FUSE

Compare

https://www.redhat.com/en/technologies/storage

Ceph: Provides a robust, highly scalable block, object, and filesystem storage platform for modern workloads, like cloud infrastructure and data analytics. Consistently ranked as preferred storage option by OpenStack® users.

Gluster: Provides a scalable, reliable, and cost-effective data management platform, streamlining file and object access across physical, virtual, and cloud environments.

http://cecs.wright.edu/~pmateti/Courses/7370/Lectures/DistFileSys/distributed-fs.html

| | HDFS | iRODS | Ceph | GlusterFS | Lustre |
|---|---|---|---|---|---|
| Arch | Central | Central | Distributed | Decentral | Central |
| Naming | Index | Database | CRUSH | EHA | Index |
| API | CLI, FUSE, REST | CLI, FUSE, REST | FUSE, mount, REST | FUSE, mount | FUSE |
| Fault-detect | Fully connect. | P2P | Fully connect. | Detected | Manually |
| sys-avail | No-failover | No-failover | High | High | Failover |
| data-avail | Replication | Replication | Replication | RAID-like | No |
| Placement | Auto | Manual | Auto | Manual | No |
| Replication | Async. | Sync. | Sync. | Sync. | RAID-like |
| Cache-cons | WORM, lease | Lock | Lock | No | Lock |
| Load-bal | Auto | Manual | Manual | Manual | No |
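The Naming row in the comparison above separates systems that look a file up in an index or database (HDFS, iRODS, Lustre) from systems that compute its location (CRUSH in Ceph, EHA in GlusterFS). The sketch below is neither CRUSH nor Gluster's elastic hashing. It uses rendezvous hashing as a stand-in to illustrate the general idea: any client that knows the node list can work out where an object lives from its name alone. The `place` function and the node names are hypothetical.

```python
import hashlib


def place(name: str, nodes: list[str], replicas: int = 2) -> list[str]:
    """Pick `replicas` nodes for `name` using rendezvous hashing."""

    def score(node: str) -> int:
        # Hash the (node, name) pair; the highest scores win.
        digest = hashlib.sha256(f"{node}:{name}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    return sorted(nodes, key=score, reverse=True)[:replicas]


nodes = ["node-a", "node-b", "node-c", "node-d"]
targets = place("photos/cat.jpg", nodes)

# Every client computes the same answer without asking a central index,
# regardless of the order in which it happens to list the nodes.
assert targets == place("photos/cat.jpg", list(reversed(nodes)))
```

With this scheme, removing a node that does not hold a given object leaves that object's placement unchanged, which is the property that lets such systems avoid a central metadata lookup.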

http://www.youritgoeslinux.com/impl/storage/glustervsceph

Gluster - C

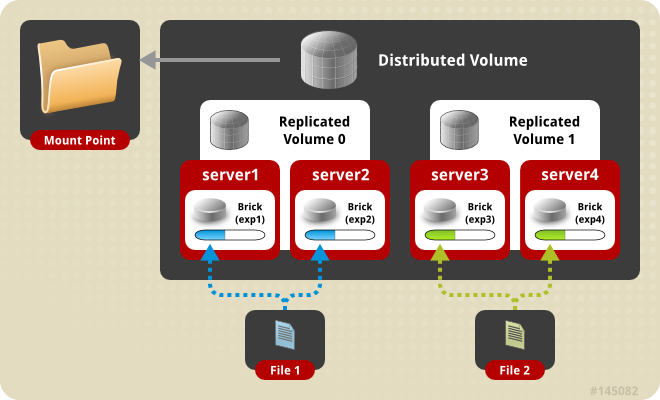

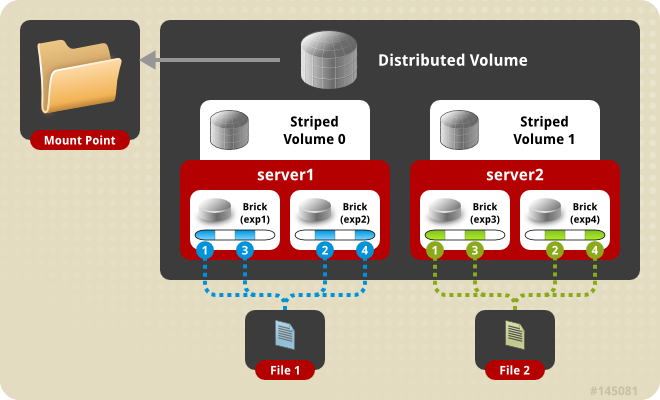

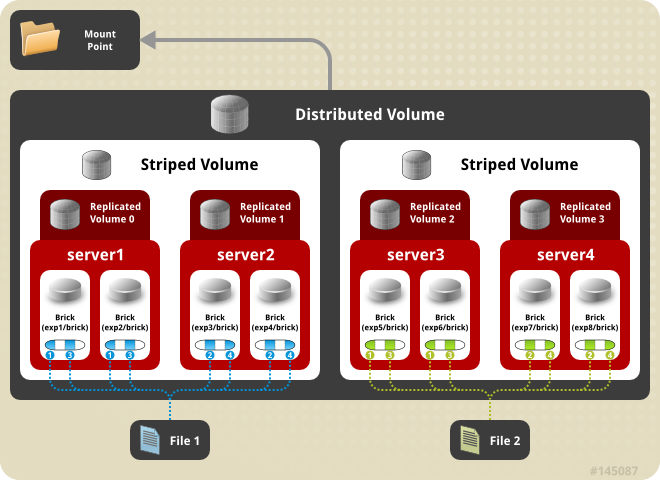

Gluster is software-defined distributed storage that can scale to several petabytes. It provides interfaces for object, block, and file storage.

http://docs.gluster.org/en/latest/Quick-Start-Guide/Architecture/

Quickstart

http://docs.gluster.org/en/latest/Quick-Start-Guide/Quickstart/

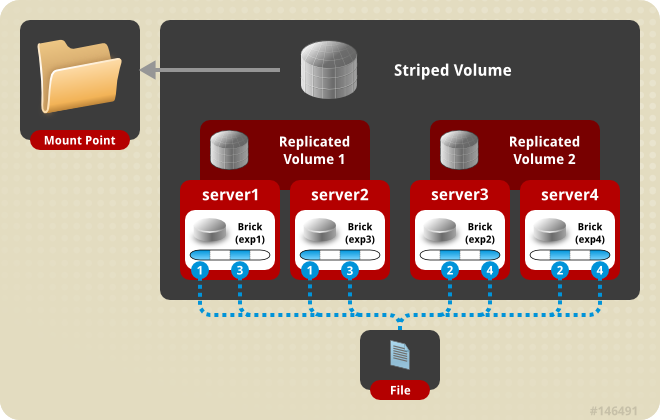

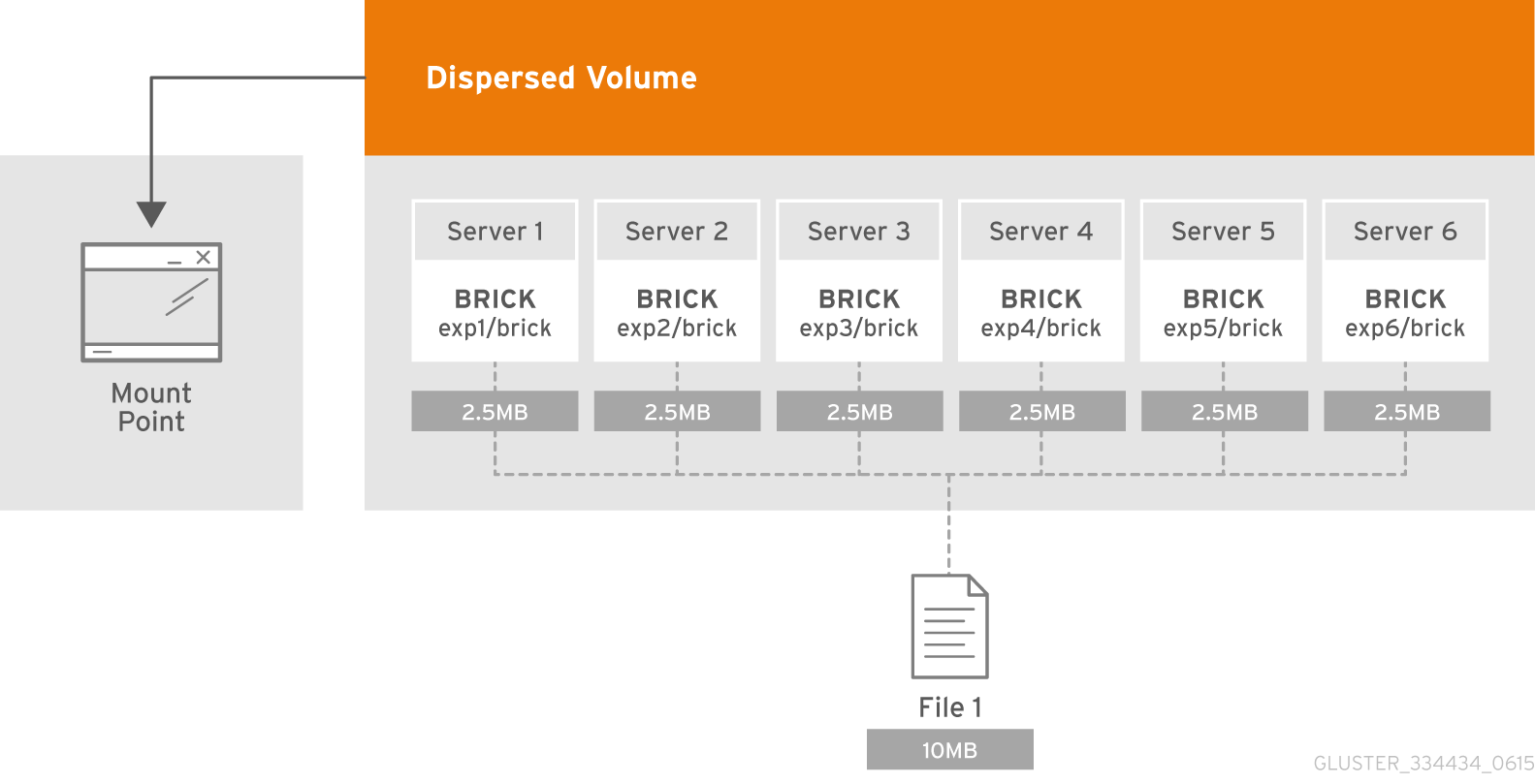

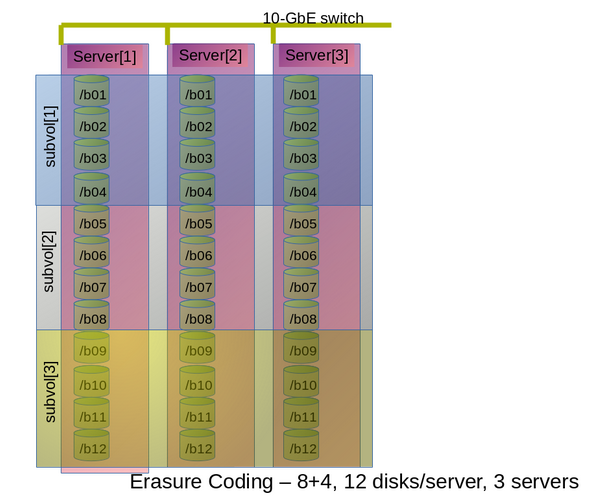

Types of Volumes

RDMA (Remote Direct Memory Access)

http://docs.gluster.org/en/latest/Administrator Guide/RDMA Transport/

As of now, only the FUSE client and the gNFS server support RDMA transport.

Snapshots

http://docs.gluster.org/en/latest/Administrator Guide/Managing Snapshots/

The GlusterFS volume snapshot feature is based on thinly provisioned LVM snapshots.

on ZFS

http://docs.gluster.org/en/latest/Administrator Guide/Gluster On ZFS/

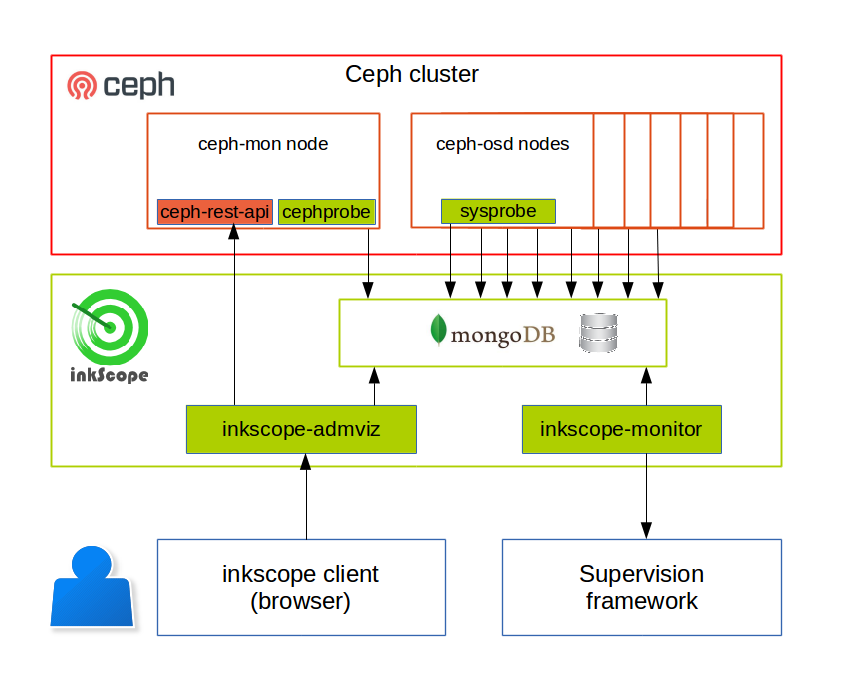

Ceph - C++

Ceph uniquely delivers object, block, and file storage in one unified system. A Ceph Storage Cluster consists of two types of daemons:

Ceph Monitor: maintains a master copy of the cluster map

Ceph OSD Daemon: checks its own state and the state of other OSDs and reports back to monitors.
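As a rough illustration of the two roles just described, here is a toy in-memory model: OSDs check their peers and report to a monitor, which keeps the master copy of the cluster map. This is a sketch under heavy simplification, not Ceph's protocol. Real clusters involve monitor quorum and heartbeat timing, none of which is modeled, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Monitor:
    """Toy stand-in for a Ceph Monitor holding the cluster map."""
    cluster_map: dict[int, str] = field(default_factory=dict)  # osd id -> "up"/"down"
    epoch: int = 0

    def report(self, osd_id: int, state: str) -> None:
        # Bump the epoch only when the map actually changes.
        if self.cluster_map.get(osd_id) != state:
            self.cluster_map[osd_id] = state
            self.epoch += 1


@dataclass
class Osd:
    """Toy stand-in for an OSD daemon that watches its peers."""
    osd_id: int
    alive: bool = True

    def check_and_report(self, peers: list["Osd"], monitor: Monitor) -> None:
        if not self.alive:
            return  # a failed daemon reports nothing
        monitor.report(self.osd_id, "up")
        for peer in peers:
            if peer.osd_id != self.osd_id:
                monitor.report(peer.osd_id, "up" if peer.alive else "down")


mon = Monitor()
osds = [Osd(0), Osd(1), Osd(2)]
osds[2].alive = False  # simulate a failed daemon
for osd in osds:
    osd.check_and_report(osds, mon)

print(mon.cluster_map)  # {0: 'up', 1: 'up', 2: 'down'}
```

The failed OSD never reports for itself; its state reaches the monitor only because its peers noticed and reported it.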

Setup

http://docs.ceph.com/docs/master/cephfs/

Docker

CACHE TIERING

Snapshot

http://docs.ceph.com/docs/master/rbd/rbd-snapshot/

Ceph supports many higher-level interfaces, including QEMU, libvirt, OpenStack, and CloudStack. Ceph supports the ability to create many copy-on-write (COW) clones of a block device snapshot. Snapshot layering enables Ceph block device clients to create images very quickly.
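To see why copy-on-write clones are quick to create, consider the toy in-memory model below. It is not Ceph's RBD implementation or API; the `Snapshot` and `Clone` classes are hypothetical and only illustrate the layering idea: a clone starts empty and stores just the blocks written after it was created, reading everything else from its parent snapshot.

```python
class Snapshot:
    """Read-only view of a block device at one point in time (toy model)."""

    def __init__(self, blocks: dict[int, bytes]):
        self._blocks = dict(blocks)  # frozen copy of the block map

    def read(self, index: int) -> bytes:
        return self._blocks.get(index, b"")


class Clone:
    """Copy-on-write child: stores only blocks written after cloning."""

    def __init__(self, parent: Snapshot):
        self.parent = parent
        self.own_blocks: dict[int, bytes] = {}

    def read(self, index: int) -> bytes:
        # Fall through to the parent for blocks never written here.
        if index in self.own_blocks:
            return self.own_blocks[index]
        return self.parent.read(index)

    def write(self, index: int, data: bytes) -> None:
        self.own_blocks[index] = data


golden = Snapshot({0: b"boot", 1: b"rootfs"})
vm1, vm2 = Clone(golden), Clone(golden)  # creating a clone copies no data
vm1.write(1, b"rootfs-patched")

assert vm1.read(1) == b"rootfs-patched"  # vm1 sees its own write
assert vm2.read(1) == b"rootfs"          # vm2 still sees the snapshot
```

Because each clone holds only its own changes, many images can share one snapshot, and creating a new one costs almost nothing up front.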

UI - inkscope

https://github.com/inkscope/inkscope (with screenshots)

Rook - Go

File, Block, and Object Storage Services for your Cloud-Native Environment

https://rook.github.io/docs/rook/master/kubernetes.html

IPFS

Backup

duplicati

https://github.com/duplicati/duplicati

https://www.duplicati.com/screenshots/

Store securely encrypted backups in the cloud! Amazon S3, OneDrive, Google Drive, Rackspace Cloud Files, HubiC, Backblaze (B2), Amazon Cloud Drive (AmzCD), Swift / OpenStack, WebDAV, SSH (SFTP), FTP, and more!
